What is the shape of progress in ideas?

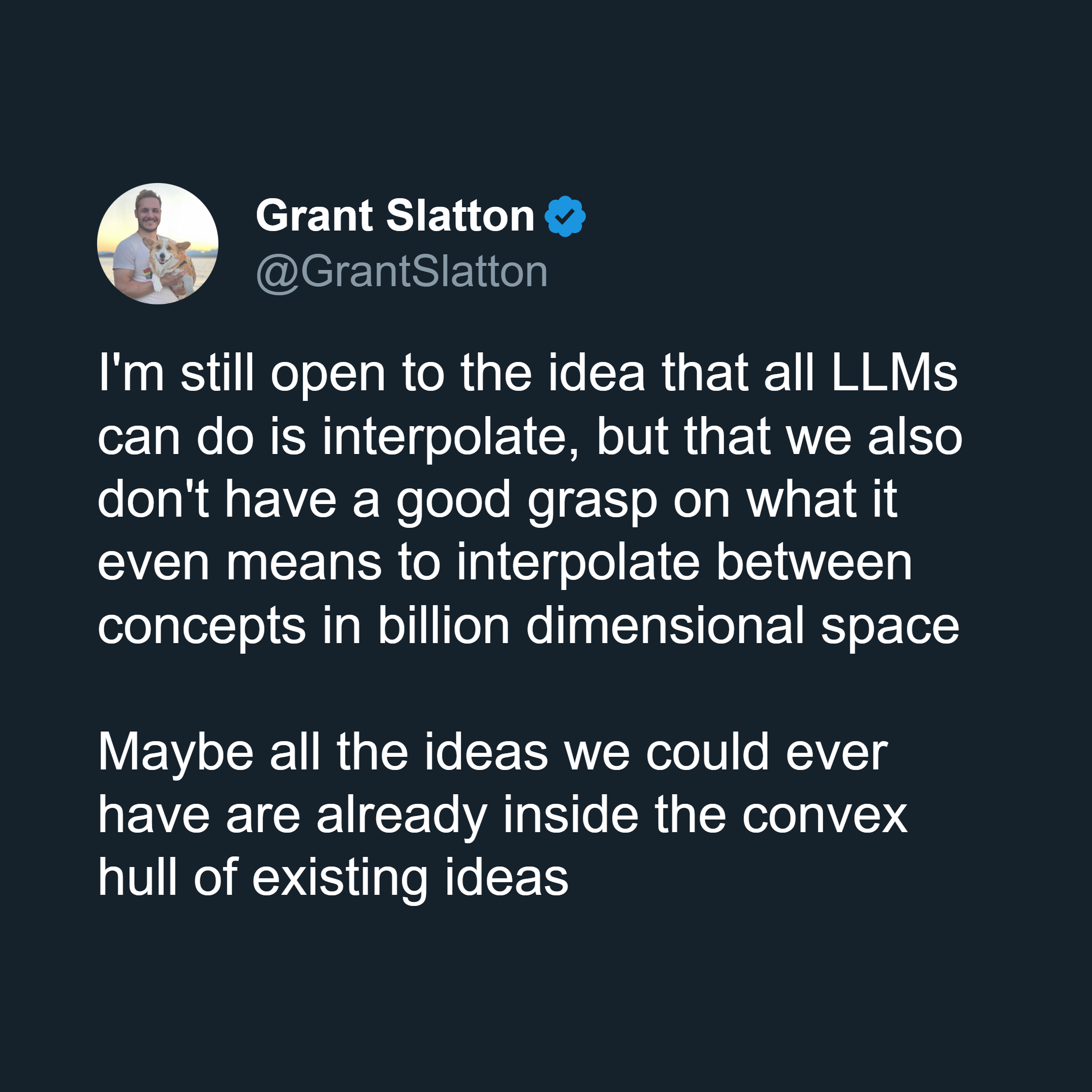

Given the effectiveness of LLMs in generalizing past the training data, one might expect that all the ideas we might care to look for are in the latent space implied by existing ones.

If we picture ideas as points in a high-dimensional space, this could mean that all useful ideas lie within the convex hull of existing ones. This is obviously not quite true: the ideas at the corners of that hull had to be generated by somebody, so there's probably more out there. There's another version which could also be true: that when you process enough existing ideas, you can generalize to a new set of ideas implied by your priors. I find this version a bit suspect too, since it seems to ignore the ideas which shaped your priors in the first place.

But we might ask the broader question: what does it look like to generalize from your set of current ideas to new ones?

I'd almost want to contest the structure of the question: you don't generalize directly from ideas to ideas, you have to accumulate evidence at some point.

The simplest possible way to get this evidence is to condition on other ideas: you generalize a little bit, expanding your range of possible thought, update on whether that was helpful, and if so, expand further. You can repeat this process as many times as you want to claim new, valid territory in ideaspace. I think something involving this sort of iterative expansion is necessary; you can't just derive new ideas from the ether. See *Why Greatness Cannot Be Planned* for more justification.
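To make the loop concrete, here is a toy sketch of that expand-then-check process. Everything in it is an assumption for illustration: ideas are just integers, "generalizing a little" means stepping to an adjacent integer, and the hypothetical `useful` function stands in for whatever feedback the world gives you.

```python
def expand(known, propose, is_useful, rounds):
    """Grow a set of ideas by proposing nearby ones and keeping those that pass feedback."""
    known = set(known)
    for _ in range(rounds):
        # Generalize a little: collect candidates adjacent to what we already know.
        candidates = {c for idea in known for c in propose(idea)} - known
        # Update on evidence: keep only the candidates that turned out to be helpful.
        accepted = {c for c in candidates if is_useful(c)}
        if not accepted:
            break  # nothing nearby survived feedback, so the frontier stops moving
        known |= accepted
    return known


# Toy idea space (hypothetical): integers, where only 0 through 5 are "useful".
def neighbors(n):
    return [n - 1, n + 1]


def useful(n):
    return 0 <= n <= 5


after_one = expand({0}, neighbors, useful, rounds=1)
after_many = expand({0}, neighbors, useful, rounds=10)
print(sorted(after_one))   # [0, 1]
print(sorted(after_many))  # [0, 1, 2, 3, 4, 5]
```

The thing to notice is that idea 5 is only reachable after 4 has been accepted: in this toy, distant territory is claimed one checked step at a time, never in a single jump.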

Alternatively, we could see ideas through the lens of search, counting bits of uncertainty. Outside your knowledge there is a vast sea of possible hypotheses, each of which you hold with some degree of credence. When you either generalize from existing knowledge or gain new knowledge, those hypotheses lose uncertainty, and some collapse into new knowledge. This doesn't happen all at once; your mind has to step through some sort of belief model to notice which implications have changed.
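As a rough illustration of what "counting bits" could mean here, the sketch below measures the Shannon entropy of a belief distribution before and after a Bayesian update. The four hypotheses, the uniform prior, and the likelihood values are all made up for the example; it shows one way to do the bookkeeping, and makes no claim about how minds actually do it.

```python
import math


def entropy_bits(dist):
    """Shannon entropy, in bits, of a dict mapping hypothesis -> probability."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def bayes_update(prior, likelihood):
    """Posterior over hypotheses, given P(evidence | hypothesis) for each one."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: w / total for h, w in unnormalized.items()}


# Hypothetical setup: four equally credible hypotheses (2 bits of uncertainty).
prior = {"A": 0.25, "B": 0.25, "C": 0.25, "D": 0.25}
# Hypothetical evidence that is impossible under C and D.
likelihood = {"A": 1.0, "B": 1.0, "C": 0.0, "D": 0.0}

posterior = bayes_update(prior, likelihood)
bits_gained = entropy_bits(prior) - entropy_bits(posterior)
print(bits_gained)  # 1.0
```

Ruling out half of the hypotheses removes exactly one bit. A hypothesis "collapses into knowledge" in this picture when the remaining entropy reaches zero.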

Related to the questions of what's happening in the space of possible ideas and the space of possible minds, Tsvi wrote a great set of research notes on whether there might exist "Cognitive Realms": "unbounded modes of thinking that are systemically, radically distinct from each other in relevant ways." These modes might involve differences in the shape of how an agent makes progress in ideas, deeply ingrained enough that it's not really possible for them to "steal strategies" from another, differently structured agent.

I think there's work to be done in finding an art of thinking which mechanistically understands how progress in thought is made, and then effectively uses that to make quicker progress. Rationality aimed for this but was almost dead on arrival, due to what seem to be mostly social considerations. I suspect a further refinement of the art would focus more on having good feedback loops and broader structure of thought than on maintaining exact precision in how evidence is used. Rationality's focus on precision was important, to be sure: the thing that focus illuminated was something like epistemic necessity. But a good next step might be to figure out how to properly expand your domain of knowledge. And that requires a hell of a lot more than just Bayes' Theorem.